The Kappa Statistic, or Cohen’s Kappa, is a statistical measure of inter-rater reliability for categorical variables. Unlike raw percent agreement, it corrects for the agreement two raters would reach by chance alone. These statistics are important because consistent agreement between reviewers is evidence that the data collected in a study are correct representations of the variables measured. Learn more about Kappa Statistics here.
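As an illustration of how the statistic is computed, Cohen's kappa compares observed agreement p_o with the agreement expected by chance p_e, using κ = (p_o − p_e) / (1 − p_e). The sketch below applies that formula to two reviewers' include/exclude decisions. The function name, the labels, and the ten example decisions are hypothetical and are not how Nested Knowledge computes its scores internally.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical labels on the same items (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(rater_a)
    # Observed agreement: share of items where both raters chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each label, multiply the two raters' marginal frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1:
        # Both raters gave every item the same single label; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical screening decisions for ten records by two reviewers.
reviewer_1 = ["include", "include", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "include", "exclude"]

kappa = cohen_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))  # 0.524
```

Here the reviewers agree on 8 of 10 records (p_o = 0.8), but because both mark most records "exclude", chance agreement is already 0.58, so kappa is only about 0.52. If scikit-learn is available, `sklearn.metrics.cohen_kappa_score` can be used to cross-check the result.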

Screening Agreement

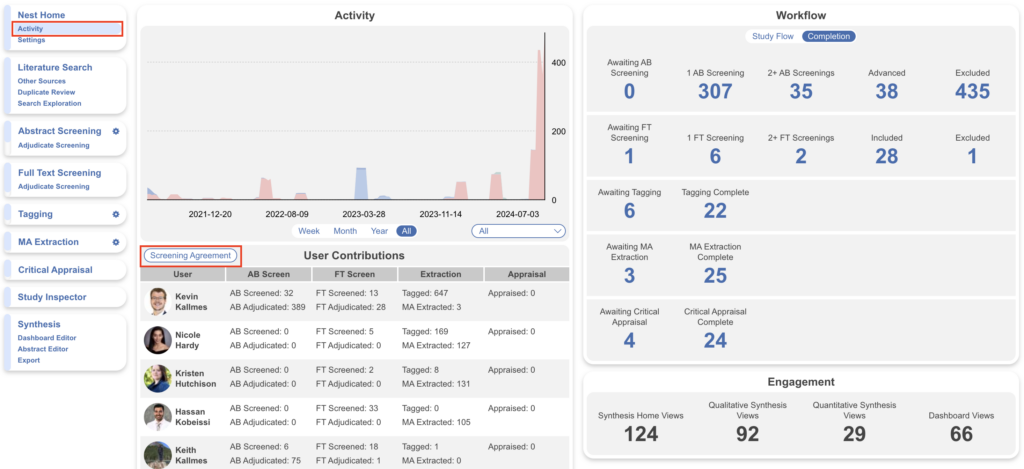

Nested Knowledge provides a built-in Screening Agreement analytic in Activity when a Dual Screening mode is switched on and screening has been performed by multiple reviewers. To access it, click Activity, then Screening Agreement next to User Contributions (see below).

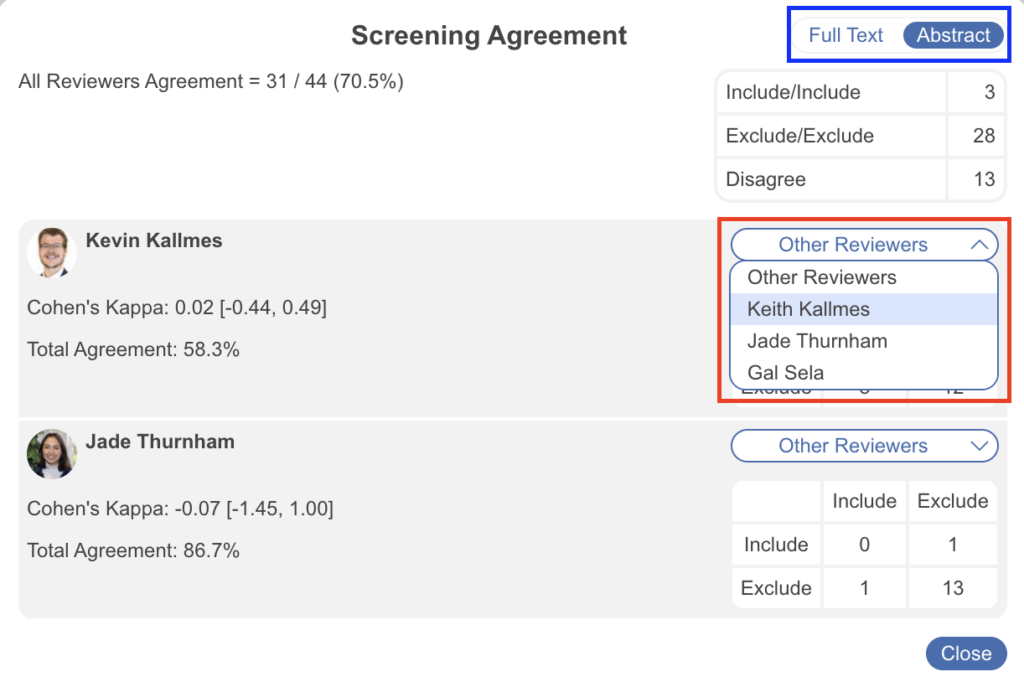

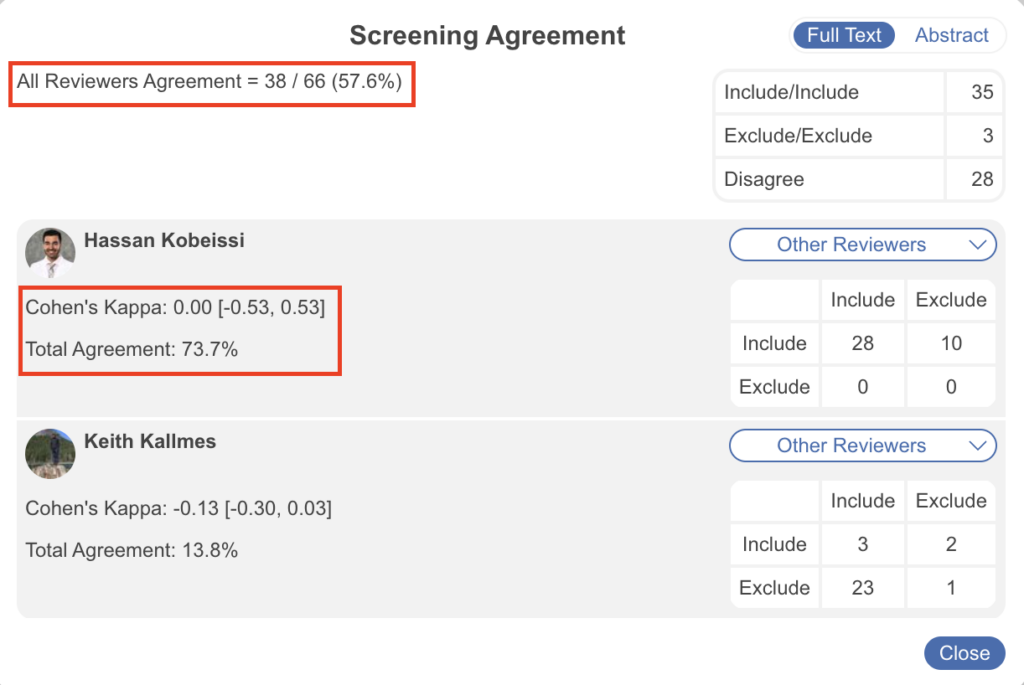

Clicking Screening Agreement opens a modal that shows live kappa scores and inter-reviewer reliability for each team member.

You can compare these statistics between specific reviewers using the “Other Reviewers” dropdown (red) and, in Dual Two Pass mode, at the Abstract or Full Text Screening level (blue).
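To make the reviewer and stage filters concrete, the sketch below shows one plausible way to compute a pairwise score: keep only the records that both selected reviewers screened at the chosen stage, then apply Cohen's kappa to those paired decisions. This is an assumption for illustration. This page does not describe how the modal selects records, and the tuple layout, reviewer names, and stage names (`"abstract"`, `"full_text"`) are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length, non-empty label lists."""
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    return float("nan") if p_e == 1 else (p_o - p_e) / (1 - p_e)


def pairwise_kappa(decisions, reviewer, other, stage):
    """Kappa between two reviewers on the records both screened at one stage.

    `decisions` is a hypothetical list of (record_id, reviewer, stage, decision) tuples.
    Returns None when the two reviewers share no records at that stage.
    """
    by_reviewer = {reviewer: {}, other: {}}
    for record_id, who, where, decision in decisions:
        if where == stage and who in by_reviewer:
            by_reviewer[who][record_id] = decision
    # Only records screened by both reviewers can be paired.
    shared = sorted(by_reviewer[reviewer].keys() & by_reviewer[other].keys())
    if not shared:
        return None
    a = [by_reviewer[reviewer][r] for r in shared]
    b = [by_reviewer[other][r] for r in shared]
    return cohen_kappa(a, b)


# Hypothetical decisions from a Dual Two Pass project.
decisions = [
    ("r1", "alice", "abstract", "include"), ("r1", "bob", "abstract", "include"),
    ("r2", "alice", "abstract", "exclude"), ("r2", "bob", "abstract", "exclude"),
    ("r3", "alice", "abstract", "include"), ("r3", "bob", "abstract", "exclude"),
    ("r4", "alice", "abstract", "exclude"), ("r4", "bob", "abstract", "exclude"),
    ("r1", "alice", "full_text", "include"), ("r1", "bob", "full_text", "include"),
    ("r5", "alice", "full_text", "exclude"), ("r5", "bob", "full_text", "exclude"),
]

print(pairwise_kappa(decisions, "alice", "bob", "abstract"))   # 0.5
print(pairwise_kappa(decisions, "alice", "bob", "full_text"))  # 1.0
print(pairwise_kappa(decisions, "alice", "charlie", "abstract"))  # None
```

Restricting to shared records matters because kappa is only defined over items that both raters labeled. A reviewer pair with no overlap has no agreement score at all, rather than a score of zero.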