How Smart Study Type Tags Are Reinventing Evidence Synthesis

One of the features of Core Smart Tags is Smart Study Type, our AI system that automatically categorises the study type.

No person can keep up with the flood of published biomedical research: there is too much information. The answers to key clinical and regulatory questions are dispersed across a mass of published literature. How do medical researchers sift through all this research? In general, they use “evidence synthesis,” the key process of aggregating relevant information and narrowing in on findings.

Evidence synthesis, in theory, reduces the amount of biomedical information. It involves gathering a set of published scientific papers, often from randomized controlled trials (RCTs), and extracting the information most critical to advancing scientific understanding on key research questions. The amount of relevant knowledge is narrowed and clarified, making scientific consensus more obtainable. For example, thirty clinical trials evaluating heart failure medications might be condensed into one all-encompassing systematic review (SR). Doctors, guideline authors, and health insurance payers, overwhelmed with trials to consume, can find clinical answers succinctly through review articles. In theory.

In reality, evidence synthesis creates new information: articles, data, figures, and sometimes policy guidelines. A systematic review with poor or incomplete methods generates new research waste. A systematic review with comprehensive methods, but low-quality underlying trials, generates research uncertainty. In some cases, a review article might even generate a new meta-review analyzing the quality of the original review. Parallel systematic reviews answering similar clinical questions, such as the effectiveness of psychotherapy, often reach inconsistent conclusions, necessitating further evidence synthesis. Reducing information, therefore, often generates more information.

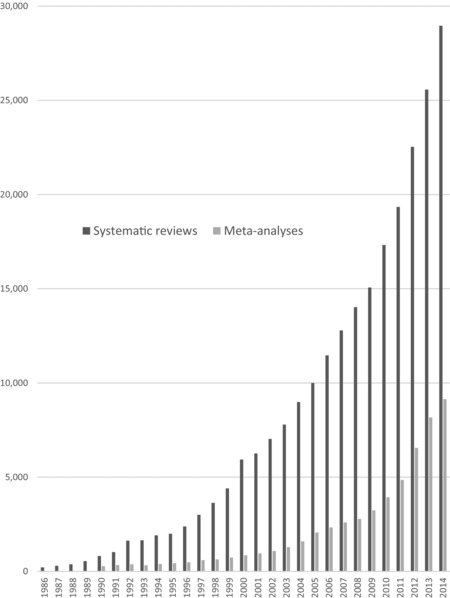

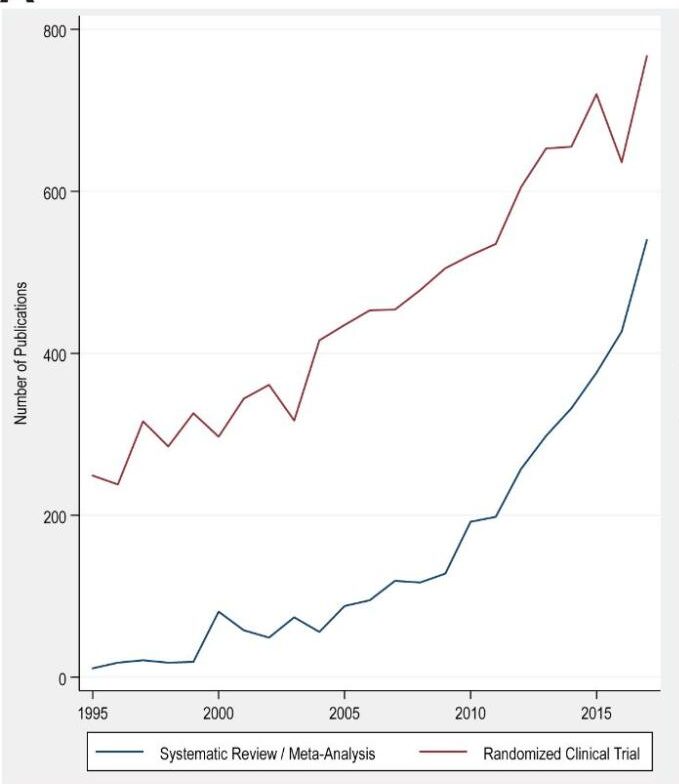

Most notably, evidence synthesis projects are typically one-off collections that are published once and then fall out of date, necessitating duplication of effort to create newer syntheses. From 1991 to 2014, the growth rate of published systematic reviews (2750%) and meta-analyses (2600%) exceeded the growth rate of RCTs (153%) across all articles indexed in PubMed. In the pediatric literature, the growth rate of systematic reviews exceeded that of RCTs by 23-fold! This rapid growth may reflect the widespread adoption of meta-analyses in the 1990s, the pressure to publish research, and the relatively low cost of reviews compared to new primary studies.
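Percent growth figures this large are easy to misread, so here is a minimal sketch of the arithmetic behind them. The function names are hypothetical, and the only inputs are the PubMed-wide percentages quoted above. A growth rate of 2750% means the annual count ended up 28.5 times its starting value, not 27.5 times.

```python
def growth_multiplier(percent_growth: float) -> float:
    """Convert a percent growth figure into a final/initial count multiplier."""
    return 1 + percent_growth / 100


def relative_growth(percent_a: float, percent_b: float) -> float:
    """How many times larger growth rate A is than growth rate B."""
    return percent_a / percent_b


# Percent growth from 1991 to 2014, as quoted in the text above.
GROWTH = {"systematic reviews": 2750, "meta-analyses": 2600, "RCTs": 153}

for label, pct in GROWTH.items():
    print(f"{label}: count multiplied by about {growth_multiplier(pct):.1f}")

# Ratio of the two growth rates across all PubMed-indexed articles.
ratio = relative_growth(GROWTH["systematic reviews"], GROWTH["RCTs"])
print(f"SR growth rate is about {ratio:.0f} times the RCT growth rate")
```

By this arithmetic, the PubMed-wide growth rate for systematic reviews is roughly 18 times that of RCTs, which puts the 23-fold pediatric figure in context.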

Literature reviews are an economical way to do research, requiring few start-up costs compared to controlled experiments and longitudinal studies, which demand equipment and span multiple years. Reviews and meta-analyses are often an excellent way to introduce young investigators to research! This relative ease of access, however, may contribute to the rapid proliferation of published reviews that suffer from methodological issues and that do more to muddle the consensus than to clarify it.

Evidence synthesis, as it is practiced now, can contribute to the dilution of biomedical information, but this should be considered a separate issue from the quality of the underlying studies. Many problems persist in systematic review methods, directly compromising the validity and usefulness of their results. According to a living review of SR flaws, common problems include insufficient literature searches, flawed risk-of-bias processes, lack of a published protocol, reliance on a single reviewer for screening and data extraction, and poor consideration of publication bias. Some investigators estimate that only 3 in 100 SRs contain clinically useful findings.

However, even high-quality systematic reviews can determine that there is insufficient evidence, leaving some policy-makers frustrated that SRs add, rather than eliminate, uncertainty. Highlighting what we do not know is critical for advancing science! Still, this pattern contributes to the displeasure with systematic reviews felt by decision-makers. Communication of evidence synthesis could be improved by more explicit methods for calling out evidence quality in underlying studies, alongside improvements in the actual search, screening, and bias assessment methods used in these reviews.

Outdated and Underpowered

Review articles become out of date quickly: about 23% of reviews are out of date within 2 years, and 7% are out of date at the time of publication. Studies included in SRs are also frequently underpowered, meaning the sample size is too small to adequately reveal the effects of the intervention compared to placebo. An analysis of more than 14,000 meta-analyses found that, in 70% of meta-analyses, all of the included studies are underpowered. The earnest effort from researchers to drill down on answers has the unintentional effect of adding to the problem of too much information of unreliable quality.
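To make "underpowered" concrete, the sketch below approximates the statistical power of a two-arm trial using a normal (z-test) approximation. The function names, the standardized effect size of 0.5, the group sizes, and the conventional 80% power threshold are all illustrative assumptions. None of them come from the analysis of meta-analyses cited above, which may define power differently.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test with equal arms.

    effect_size is the standardized mean difference (Cohen's d).
    """
    z = NormalDist()  # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected z statistic when the true effect equals effect_size.
    shift = effect_size * sqrt(n_per_group / 2)
    # Probability of landing in either rejection tail.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


def is_underpowered(effect_size: float, n_per_group: int,
                    threshold: float = 0.80, alpha: float = 0.05) -> bool:
    """Flag a design whose approximate power is below the chosen threshold."""
    return approx_power(effect_size, n_per_group, alpha) < threshold


# Hypothetical trial sizes for a moderate effect (d = 0.5).
for n in (20, 64, 200):
    power = approx_power(0.5, n)
    print(f"n per group = {n}: power = {power:.2f}, "
          f"underpowered = {is_underpowered(0.5, n)}")
```

Under these assumptions, a trial with 20 participants per arm would detect a moderate effect well under half the time, which is why pooling many small trials does not automatically yield a reliable answer.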

Removing Information

Removing information can be critical for growth! The benefit of subtraction shows up in many processes. Removing a species can enliven an ecosystem. Removing a large road can improve traffic. Perhaps… removing outdated evidence synthesis can improve the quality of the literature. This possibility is within our grasp, if evidence synthesis can be moved toward a living, updatable paradigm.

There are few incentives that would push researchers to set up a high-quality, updatable review rather than rapidly publishing an easier, lower-quality, one-off systematic review. Journals earn revenue from publishing papers. Scientists must publish often to obtain funding and stay afloat. Industry leaders must publish research to reach clinicians. Without changes to the incentive system in research publication that reward high-quality evidence synthesis methods and persistence in updating datasets, we will struggle to meaningfully build upon previous research.

Though the incentives of producers may not push toward higher-quality syntheses, the incentives of consumers of literature likely do favor innovation. These consumers (clinicians, payers, and patients) have limited time and cognitive capacity to find systematic reviews, judge their quality, and interpret them. This has led to some pressure to innovate, but the push has not yet overthrown the status quo. The consumers of literature are spread out and have no coherent voice, and are therefore dependent on improvements in methods that are out of their control.

Living Evidence and the Human Body

The human body faces a similar problem: it has too much information to read and review, including too much genetic material and too many signals from the brain. Unlike the diffuse audience that reads and uses medical evidence, the human body is subject to specific constraints. It has finite energy. It must have functional communication or perish. The body has adopted workflows that require it to delete information as often as it adds information. Genes are turned off to ensure that only relevant tasks are undertaken by a cell. Cells in the brain undergo synaptic pruning, snipping off the extra bits. Cells elsewhere undergo pre-programmed cellular death, preventing them from reproducing and becoming tumors. Even the mental capabilities related to sight are often repurposed in the blind via neuroplasticity. Workflows to replace outdated, excess information will likewise be essential for finding meaning in the clinical literature.

“Living systematic review” (LSR) refers to a published research synthesis that is replaced and updated as new evidence emerges. The name is well-suited because living reviews are subject to the same kind of constraints on information as living organisms. Living reviews assume we inhabit an environment where resources are tight and we must convey evidence effectively. The LSR may even provide a solution to the problem of continued proliferation of low-quality, duplicative reviews, as a single review on a topic can be carefully designed, maintained, and published. LSRs are recommended in fields where new evidence is likely to emerge or the current evidence is inconclusive, and research consumers such as Health Technology Assessment bodies are already considering moving toward updatable evidence synthesis to assist with reimbursement decisions.

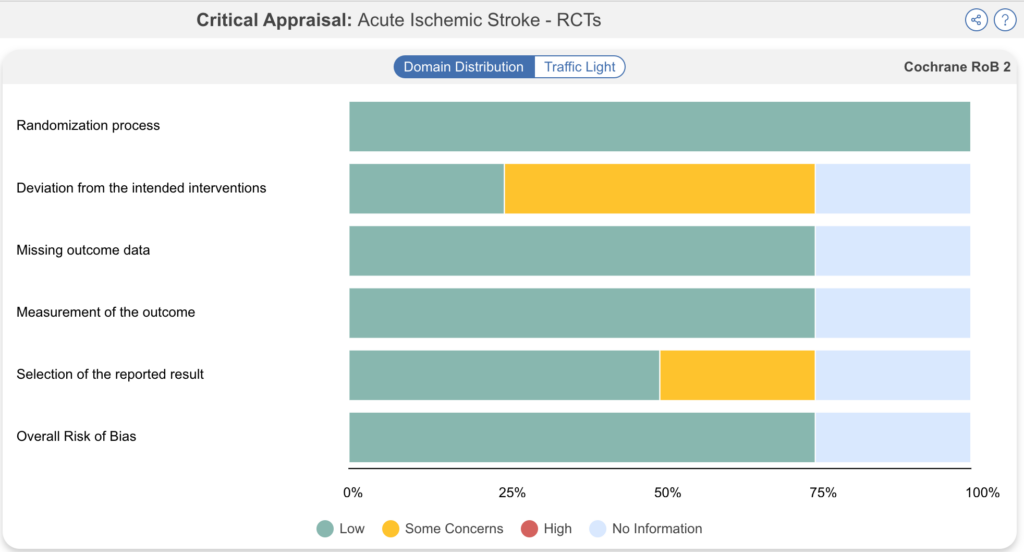

Nested Knowledge offers a platform for living reviews, where researchers rapidly refresh literature searches, incorporate new evidence, and watch as interactive tables and figures automatically update. Nested Knowledge supports rigor and transparency in reviews through features such as dual screening, dual data extraction, research protocols, critical appraisal (formerly ‘risk of bias’) and PRISMA reporting. By providing an environment for performing naturally-updatable evidence synthesis with transparent, rigorous methods, we hope to help researchers take on the Evidence Synthesis Contradiction while providing research consumers with up-to-date, easily-interpretable syntheses on key research topics.

Neurosurgeons at the Mayo Clinic and Stanford published a living systematic review using Nested Knowledge in the journal Interventional Neuroradiology. The researchers performed an SR comparing therapies for treatment of stroke with large core volume, and they included trials until February 2023. When a new clinical trial enrolling 300 patients was published in June 2023, the researchers updated the review to include the new data.

To be effective, evidence synthesis must shorten rather than lengthen the body of must-read literature. By updating living systematic reviews, we can minimize redundant and wasteful research, replacing past work with the latest data. LSRs with transparent, comprehensive methods are an effective tool for promoting research integrity. Nested Knowledge allows scientists and other professionals to rapidly update literature searches, extract the latest data, and share updated results in an interactive dashboard.

