July 1, 2022

This article summarizes the findings from a recently published feature analysis of systematic review software authored by the Nested Knowledge team.

Imagine you’re looking to buy a new car, but can’t find one that has all the features that make a car, a car. You really like the interior of the new Mercedes E-Class, but unbelievably, the designers left out the seats. The Tesla Model 3 can do 0-60 faster than you can gasp, but Elon inexplicably forgot windows. Frustrated, you think about buying an old, used Honda…but most cars from that era somehow lack any way to refill the tank, so they can really only be used once.

Which systematic review tools are ready for a test drive?

Obviously, none of these car companies would go to market with products that were not “feature complete” from the perspective of a normal driver. Unfortunately, that is not the case for systematic review software: scientific researchers looking to find, filter, and compare evidence from the published literature often have to make tough choices about which key features they will live without. Sometimes, the features that are missing are as important to the review process as seats, windows, and wheels are to driving. Any scientist who doesn’t check to make sure she is using a tool that does everything she needs may end up stranded halfway through the process. We think that’s a colossal waste, so we set out to see if any current tool enables a review from start to finish. But, like our car engineers above, we also had to answer the question, “what features do we need in a product before we can take it for a test drive?”

What do scientists demand?

We have to admit here, we aren’t actually trying to buy a car, we’re trying to build one! So, being conflicted engineers rather than unbiased scientists, we searched the literature and found four independent feature analyses (by Mierden, Harrison, Marshall, and Kohl) that, between them, listed 30 features that are non-negotiable in the review process. Some of the feature demands, like “ability to screen studies” or “ability to export results”, are central to any reviewer’s process, but some of the needs were very specific and technical. For instance:

We were most excited about some of the more high-octane and modern additions, like “virtual collaboration” (ability to chat and add notes to specific studies) and “living review” (updatability of every step in the software), which we thought would also move the field forward if they could be offered across review tools.

What are the options?

Though there are over 240 tools for systematic review on the SR Toolbox, only 24 are functioning, web-based software tools for pre-clinical or clinical review. These range from academic projects to COVID-inspired living visualizations, so not every tool was intended to cover every feature recommended by Mierden/Harrison/Marshall/Kohl, but all tools cover the essential steps of adding studies, screening, and extracting and presenting some sort of review data. Table 4 in the Results section of our freely available feature assessment lays out all the options.

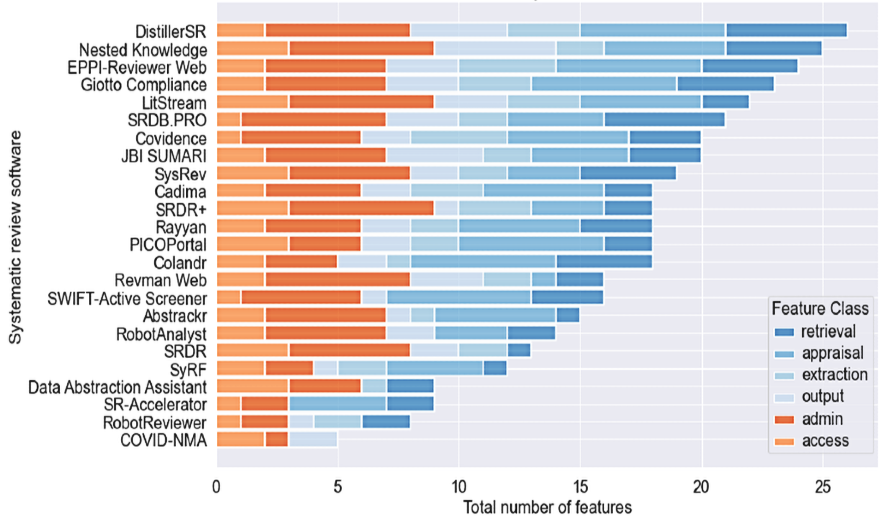

Several tools stood out as nearly feature-complete: Giotto Compliance offered 27 of 30 features, DistillerSR (an Evidence Partners software) and Nested Knowledge (designed by the authors) each offered 26 of 30, and EPPI-Reviewer (from the EPPI-Centre) followed with 25 of 30. LitStream, Covidence, JBI Sumari, SRDB.PRO, and SysRev rounded out the top group, all offering 20+ features.

What is missing from these tools?

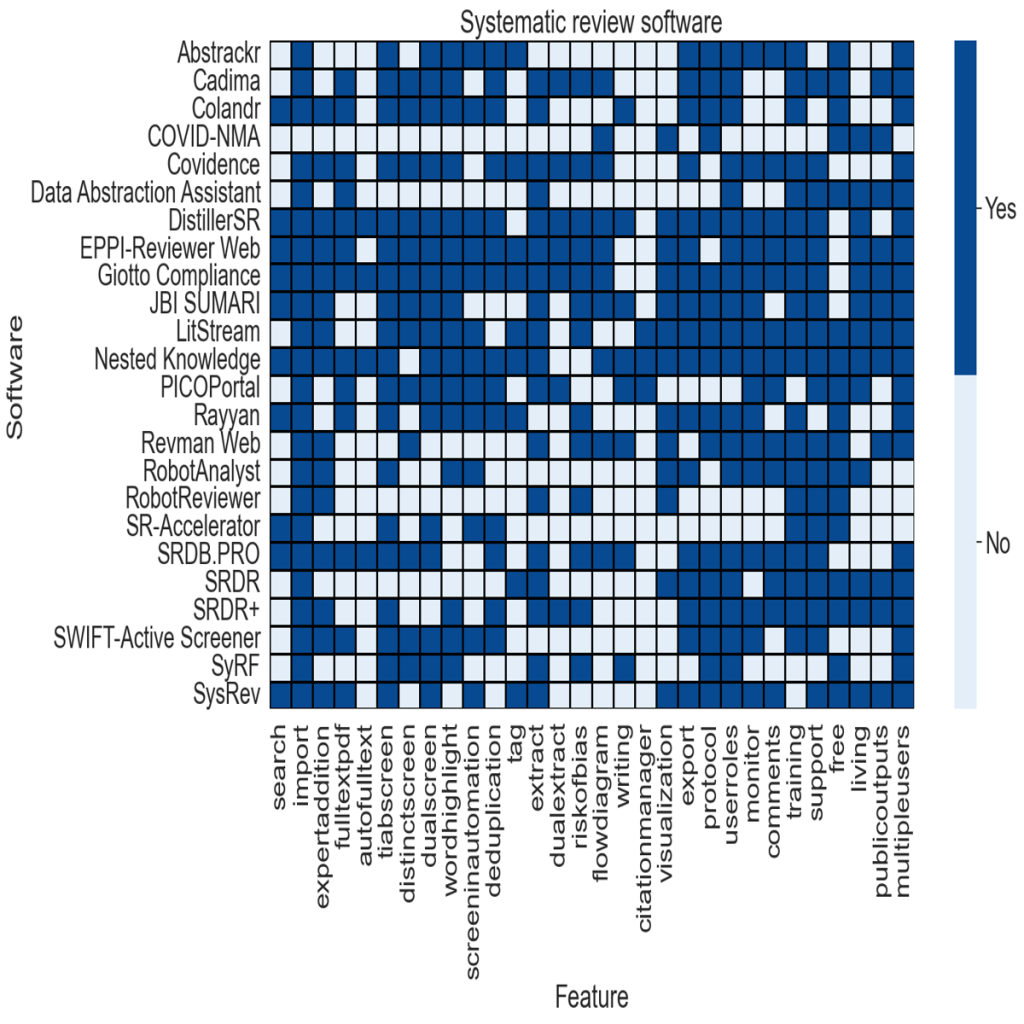

We definitely noticed some trends in which features were offered by nearly everyone (23 of 24 tools offered file import, for instance), and which were rare. Notably:

Overall, almost all tools did well in the appraisal (“screening”) category, so filtering references is unlikely to be the constraint. On the other hand, only 18 of the 24 tools offered any extraction, and only 7 offered dual extraction, so high-quality data extraction is a major gap for almost any software. See the figure for a heat map of which features were offered (dark blue) by each software.
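For readers who prefer percentages to raw counts, here is a minimal sketch that converts the counts quoted in this article into shares of the 24 tools assessed. The variable and function names are our own illustration and are not taken from the published analysis.

```python
# Counts quoted in this article, out of the 24 tools assessed.
TOOLS_ASSESSED = 24
tools_offering = {
    "file import": 23,
    "any extraction": 18,
    "dual extraction": 7,
}


def share(count, total=TOOLS_ASSESSED):
    """Return the percentage of tools offering a feature, rounded to one decimal."""
    return round(100 * count / total, 1)


# Hypothetical helper structure: feature name -> percentage of tools offering it.
coverage = {feature: share(n) for feature, n in tools_offering.items()}

for feature, pct in coverage.items():
    print(f"{feature}: {pct}% of tools")
```

Put this way, fewer than a third of the tools offer dual extraction, even though nearly all of them can import files.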

What about automation?

You may ask, who needs this whole dual extraction rigamarole? Like driving, isn’t it going to just be automated?

Unfortunately, automated extraction is extremely under-developed. To quote a review of automated extraction technologies, “[automation] techniques have not been fully utilized to fully or even partially automate the data extraction step of systematic review”, mostly because of the stacking of three issues:

So, until we can extract and harmonize with incredible accuracy, and prove that both steps have beyond-human accuracy, extraction will remain a part of a scientist’s burden in the review process.

What features should be focused on in the future?

Living (updatable) reviews! As we noted in our Discussion, “The scientific community has made consistent demands for SR processes to be rendered updatable, with the goal of improving the quality of evidence available to clinicians, health policymakers, and the medical public … until greater provision of living review tools is achieved, reviews will continue to fall out of date and out of sync with clinical practice.”

At Nested Knowledge, living reviews are central to our mission, so we recommend that this should be the focus for any tool that does not yet offer updatability across all steps of the review. Since it’s already built into our system, we are focusing on building out:

TL;DR: Pick your Systematic Review software carefully!

Email us: support@nested-knowledge.com. We’d love to hear from you.